Backend Platforms - Azure Batch - With OneCompute

Follow the tutorial to create your client and worker projects

The tutorial shows you how to use OneCompute to create and run a workload using Azure Batch as the back-end platform. Having followed the tutorial, you should now have a client application and a worker project that are configured to run a workload on Azure Batch.

We will now look at the code that relates specifically to Azure Batch, as opposed to any other back-end service.

Worker Project: Configure

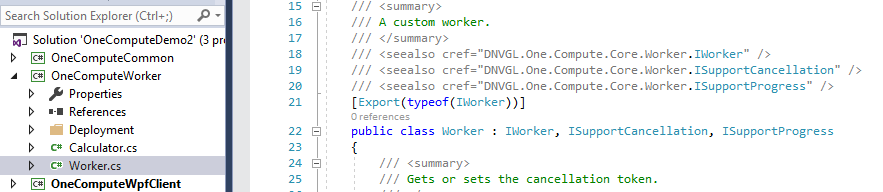

The DNV GL OneCompute Worker Class Library project template (see here) provides a worker project that can be hosted by a OneCompute WorkerHost. To simplify deployment to Azure Batch, the project template references the Azure Batch worker host. The reference is not needed for compilation, but it makes packaging your worker easier.

The project includes a file, Worker.cs, containing the class Worker.

This class provides the glue between your workload code and OneCompute and abstracts away any dependency on a specific host.

It contains an asynchronous ExecuteAsync method where you hook up your compute code:

# Worker Project Code Block:

    /// <summary>
    /// Executes the task work unit.
    /// </summary>
    /// <param name="statusNotificationService">The status notification service.</param>
    /// <param name="workUnit">The work unit.</param>
    /// <param name="dependencyResults">The dependency results.</param>
    /// <returns>
    /// A <see cref="MyResult"/> instance.
    /// </returns>
    /// <exception cref="System.ArgumentNullException">statusNotificationService</exception>
    /// <exception cref="System.Exception">Work unit data not found.</exception>
    public async Task<object> ExecuteAsync(IWorkerExecutionStatusNotificationService statusNotificationService, IWorkUnit workUnit, IEnumerable<Result> dependencyResults)
    {
        this.StatusNotificationService = statusNotificationService ?? throw new ArgumentNullException(nameof(statusNotificationService));

        // Obtain the input data for this execution - replace MyInput with your own POCO class representing your input data
        var myInput = workUnit.Data.Get<MyInput>();
        if (myInput == null)
        {
            throw new Exception("Work unit data not found.");
        }

        // Execute your workload - replace this section with a call to an asynchronous method that calls your code.
        // Your code can be included in this class, a referenced Assembly or even Platform Invoked to call into an unmanaged DLL.
        var numberToSquare = myInput.NumberToSquare;
        this.UpdateStatus(0.0, $"Calculation of square of '{numberToSquare}' is started."); // Make a status call to the OneCompute status monitor
        var result = await SquareAsync(numberToSquare);

        // After workload completion, make a status call to the OneCompute status monitor.
        if (result.Cancelled)
        {
            this.UpdateStatus(0.0, $"Calculation of square of '{numberToSquare}' was cancelled.", WorkStatus.Aborted);
        }
        else
        {
            this.UpdateStatus(1.0, $"Calculation of square of '{numberToSquare}' was completed. Result is '{result.Result}'.", WorkStatus.Completed);
        }

        return result.Result;
    }

Your compute code can be any code you add to the project. Alternatively, you can reference another Assembly or make a Platform Invoke call into an unmanaged DLL.
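The ExecuteAsync method above calls SquareAsync and reads the Cancelled and Result members of what it returns, but those pieces are not shown. The following is a minimal sketch of what they might look like. The types SquareResult and SquareWorkload, the int type of NumberToSquare and the optional cancellation token are all assumptions made for illustration; the code generated by the template may differ.

```csharp
using System;
using System.Threading;
using System.Threading.Tasks;

// Assumed shape of the input POCO; only NumberToSquare appears in the template code.
public class MyInput
{
    public int NumberToSquare { get; set; }
}

// Hypothetical result wrapper exposing the two members that ExecuteAsync reads.
public class SquareResult
{
    public bool Cancelled { get; set; }
    public long Result { get; set; }
}

// Hypothetical home for the workload; in the template it could live in Worker itself.
public static class SquareWorkload
{
    public static Task<SquareResult> SquareAsync(int number, CancellationToken token = default(CancellationToken))
    {
        // Run the computation off the calling thread so the caller can await it.
        return Task.Run(() =>
        {
            if (token.IsCancellationRequested)
            {
                // Report cancellation through the result instead of throwing.
                return new SquareResult { Cancelled = true };
            }

            // Widen to long before multiplying to avoid int overflow.
            return new SquareResult { Result = (long)number * number };
        });
    }
}
```

Reporting cancellation through the result object, rather than by throwing, matches the way ExecuteAsync branches on result.Cancelled before making its final status call.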

Worker Project: Deploy Worker packages to Azure Batch

Azure Batch needs to be supplied with the Azure Batch worker host and your worker library in the form of an Application Package. We currently provide scripts to help you produce Application Packages, but we do not yet have deployment tooling; we hope to add this in a future release.

Note

For detailed information on Azure Batch Application Packages read the Microsoft documentation here.

Please see here for information on constructing application packages using our scripts.

Once you have your application package zip files, you can deploy them manually to your Azure Batch account via the Azure Portal. Follow the Microsoft instructions referenced in the note above to do this.

Tip

Manual steps are inconvenient as part of a development workflow. For information on how to automate Application Package deployment to Azure Batch please read here.

Client Application: Specify the Application Packages that the job will utilize

Now look at the client application code. The template includes code to help you construct a OneCompute Job.

In the DefineApplicationPackagesForJob() method you specify the application packages that the job will use.

# Client Application Code Block:

    var deploymentModel = new DeploymentModel();
    deploymentModel.AddApplicationPackage("<insertyourpackagenamehere>");
    // Add any additional packages here..
    ...
    job.DeploymentModel = deploymentModel;

Client Application: Configure Azure Batch Service Credentials

The Azure Batch service is an Azure resource and is therefore protected from anonymous use. This means that the client needs to authenticate itself in order to access the Azure Batch APIs.

There are several ways to do this. The easiest is to use Azure Batch Shared Key credentials, which is the method described here. For alternative mechanisms, please read the help documentation on Security.

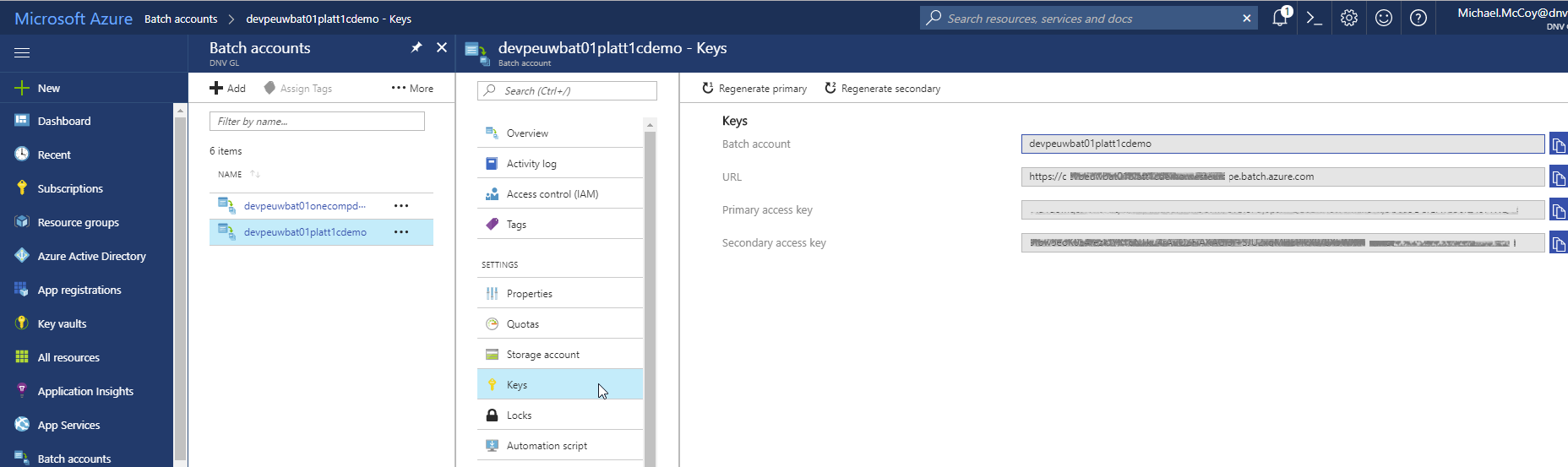

You obtain your Batch credentials from the Azure Batch Service blade of the Azure Portal. Navigate to your Azure Batch resource and then click the Keys blade.

Azure Batch Service Credentials

The code to specify the credentials looks like this. Plug in the values of the three fields that you copied from the portal.

# Client Application Code Block:

    var batchCredentials = new BatchCredentials
    {
        BatchServiceAuthenticationMechanism = BatchAuthenticationMechanism.BatchAccountAccessKeyAuthentication,
        BatchAccountName = "<enter value copied from Batch Account field>",
        BatchAccountUrl = "<enter value copied from URL field>",
        BatchAccountKey = "<enter value copied from Primary Access Key field>"
    };
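Pasting the account key into source code is convenient for a first run, but it is easy to commit by accident. One possible alternative, sketched below, is to read the three values from environment variables and fail early if any is missing. The class BatchAccountSettings and the variable names MY_BATCH_ACCOUNT_NAME, MY_BATCH_ACCOUNT_URL and MY_BATCH_ACCOUNT_KEY are hypothetical and not part of OneCompute; the values returned would then be assigned to the BatchCredentials properties shown above.

```csharp
using System;

// Hypothetical holder for the three values copied from the Keys blade.
public sealed class BatchAccountSettings
{
    public string AccountName { get; }
    public string AccountUrl { get; }
    public string AccountKey { get; }

    public BatchAccountSettings(string accountName, string accountUrl, string accountKey)
    {
        this.AccountName = accountName;
        this.AccountUrl = accountUrl;
        this.AccountKey = accountKey;
    }

    // Reads all three settings from the process environment.
    // The variable names are illustrative assumptions; choose your own.
    public static BatchAccountSettings FromEnvironment()
    {
        return new BatchAccountSettings(
            Require("MY_BATCH_ACCOUNT_NAME"),
            Require("MY_BATCH_ACCOUNT_URL"),
            Require("MY_BATCH_ACCOUNT_KEY"));
    }

    // Returns the variable's value, or throws if it is unset or blank.
    private static string Require(string variableName)
    {
        var value = Environment.GetEnvironmentVariable(variableName);
        if (string.IsNullOrWhiteSpace(value))
        {
            throw new InvalidOperationException($"Environment variable '{variableName}' is not set.");
        }

        return value;
    }
}
```

Failing at start-up with a clear message is usually easier to diagnose than an authentication error returned later by the Batch service.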

Client Application: Create a OneCompute Scheduler for interaction with Azure Batch

OneCompute has a scheduler class, AzureBatchWorkItemScheduler, that schedules units of work on the Azure Batch service. One approach is to derive your own scheduler class from it so that you can override the GetWorkerHostPath method. This lets you decide at runtime, for each WorkUnit, which worker package to use, which is handy when you have a collection of worker packages. It is also useful when you have a parallel set of tasks followed by a concluding reduction task.

The work item scheduler constructor takes five parameters. You need to specify the name of the Pool of compute nodes (within your Azure Batch Service resource) on which you want the job scheduled. You also pass the batch credentials that we established above.

The remaining three parameters relate to storage services. For details, please read the help specific to storage services here.

An example scheduler class implementation is shown below.

# Client Application Code Block:

    /// <summary>
    /// Work scheduler for the application
    /// </summary>
    public class MyAzureBatchWorkItemScheduler : AzureBatchWorkItemScheduler
    {
        /// <summary>
        /// Initializes a new instance of the <see cref="MyAzureBatchWorkItemScheduler" /> class.
        /// </summary>
        /// <param name="poolName">The Azure Batch pool name.</param>
        /// <param name="batchCredentials">The credentials for the Azure Batch Service.</param>
        /// <param name="workItemStorageService">The work item storage service.</param>
        /// <param name="workItemStatusService">The task status service.</param>
        /// <param name="resultStorageService">The result storage service.</param>
        public MyAzureBatchWorkItemScheduler(string poolName, BatchCredentials batchCredentials, IFlowModelStorageService<WorkItem> workItemStorageService, IWorkItemStatusService workItemStatusService, IFlowModelStorageService<Result> resultStorageService)
            : base(poolName, batchCredentials, workItemStorageService, workItemStatusService, resultStorageService)
        {
        }

        /// <summary>
        /// Gets the worker host path.
        /// </summary>
        /// <param name="job">
        /// The job.
        /// </param>
        /// <param name="workUnit">
        /// The work unit.
        /// </param>
        /// <param name="workerSettings">
        /// The worker settings.
        /// </param>
        /// <returns>
        /// The worker host path for submission to the work processor.
        /// </returns>
        protected override string GetWorkerHostPath(Job job, WorkUnit workUnit, FlowModelWorkerSettings workerSettings)
        {
            const string MyAppPackage1 = "AZ_BATCH_APP_PACKAGE_<MYPACKAGENAME1>";
            const string MyAppPackage2 = "AZ_BATCH_APP_PACKAGE_<MYPACKAGENAME2>";
            var appPackage = workUnit.IsReductionTask ? MyAppPackage2 : MyAppPackage1;
            return $@"cmd /c %{appPackage}%\DNVGL.One.Compute.WorkerHost.AzureBatch.exe";
        }
    }
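The string returned by GetWorkerHostPath is a Windows command line that expands an environment variable pointing at the installed application package. To our understanding of the Azure Batch documentation, Windows nodes name that variable AZ_BATCH_APP_PACKAGE_ followed by the application ID and, when a specific version is referenced, a # and the version; please verify this against the Microsoft documentation for your node type. The sketch below shows one way to build the command line under that assumption. The class WorkerHostCommand and its Build method are hypothetical helpers, not part of OneCompute.

```csharp
using System;

// Hypothetical helper that builds the worker host command line for a Windows node.
public static class WorkerHostCommand
{
    // Executable name taken from the scheduler example above.
    private const string WorkerHostExe = "DNVGL.One.Compute.WorkerHost.AzureBatch.exe";

    // packageId: the application package ID.
    // version:   optional; omit it when the application has a default version.
    public static string Build(string packageId, string version = null)
    {
        if (string.IsNullOrWhiteSpace(packageId))
        {
            throw new ArgumentException("A package ID is required.", nameof(packageId));
        }

        // Assumed Windows naming convention: AZ_BATCH_APP_PACKAGE_<id>[#<version>].
        var variable = "AZ_BATCH_APP_PACKAGE_" + packageId;
        if (!string.IsNullOrEmpty(version))
        {
            variable += "#" + version;
        }

        // cmd expands %VARIABLE% to the directory where the package was unpacked.
        return $@"cmd /c %{variable}%\{WorkerHostExe}";
    }
}
```

For example, WorkerHostCommand.Build("MyWorker", "1.0") returns `cmd /c %AZ_BATCH_APP_PACKAGE_MyWorker#1.0%\DNVGL.One.Compute.WorkerHost.AzureBatch.exe`. An override of GetWorkerHostPath could call such a helper with a different package ID for reduction tasks.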

Client Application: Provide scheduler with storage connection string

The scheduler is passed the storage services, but there are two ways to tell the deployed worker which storage connection to use.

The first is to pass the connection string as a property when constructing the work units. In this case, tell the scheduler to configure the CloudTask environment from the work unit properties as follows:

# Client Application Code Block:

((AzureBatchWorkItemScheduler)this.workScheduler).SetEnvironmentFromProperties = true;

If you use this approach, you need to add the connection string to each of your work units as an environment property.

# Client Application Code Block:

workUnit.Properties.Add(EnvironmentVariablesNames.OneComputeStorageConnectionString, storageConnectionString);

Alternatively, tell the scheduler to force all work units to use the supplied connection string with this call:

# Client Application Code Block:

this.workScheduler.OverrideWorkerStorageConnectionString(storageConnectionString);

Client Application: Prepare a unit of work for execution on Azure Batch

A OneCompute Job is constructed of WorkUnits. A WorkUnit takes its input parameters in the form of a POCO (Plain Old CLR Object) that is declared in a library shared by the client and the worker.

A WorkUnit has further properties that let you configure the behaviour of individual work units, such as how frequently status and progress updates are allowed to fire back to the client.

# Client Application Code Block:

    // In the common library that your client and worker projects both depend on, you can define an input data class.
    // It carries the parameters needed as input to a unit of work that will be deployed to a compute node.
    var myPocoInput = new MyPocoInputClass { /* somedata */ };

    // Now construct a unit of work, passing it your input parameter object
    var workUnit = new WorkUnit
    {
        Data = new DataContainer(myPocoInput),
        StatusUpdateFrequency = 20 // Limit updates to every 20 seconds
    };