Architecture Overview

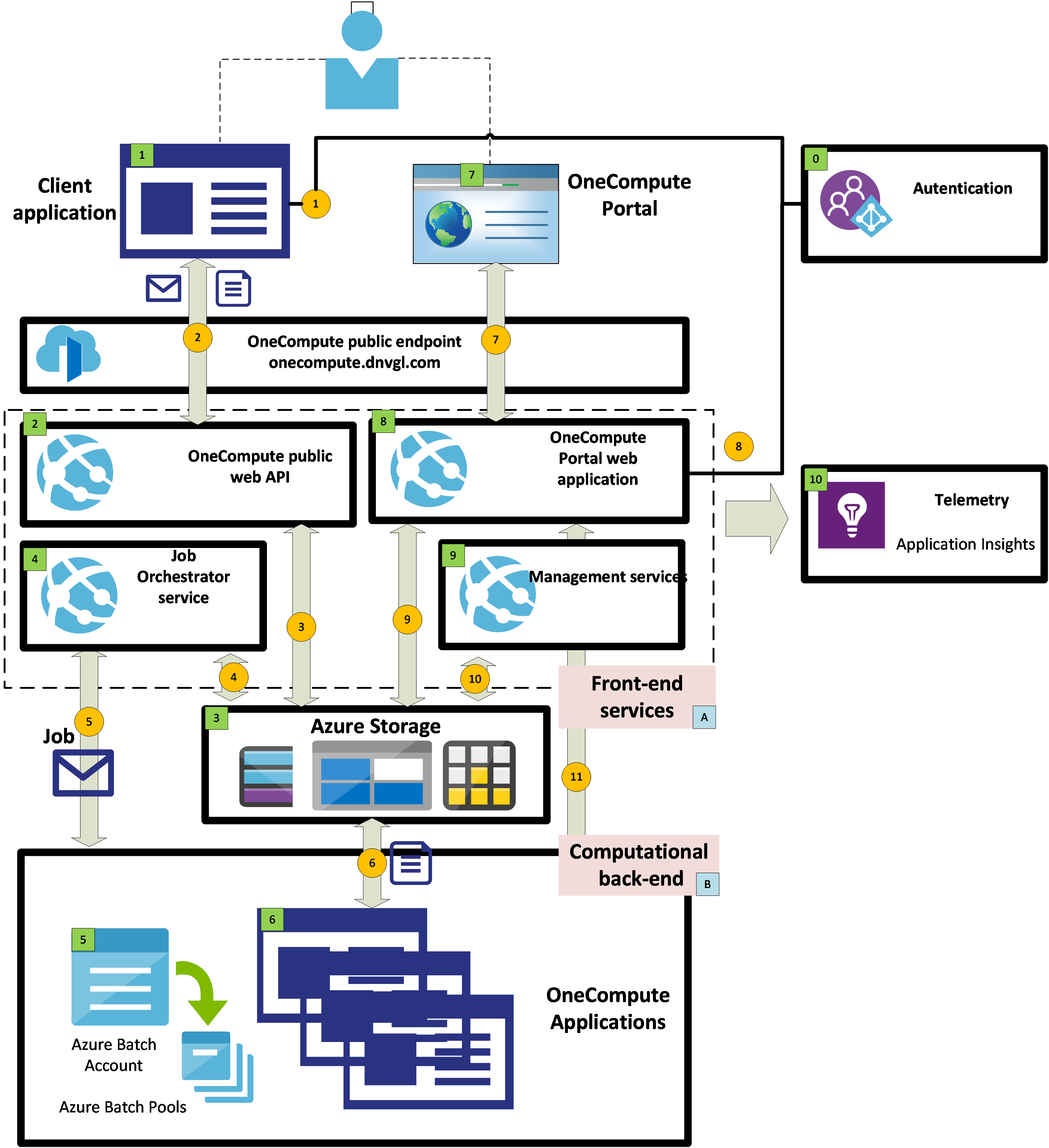

The figure above depicts the OneCompute Platform architecture. The client application uses the OneCompute cloud service to run its analyses. At the top level, OneCompute is split into two parts:

A. The Front-end Services, comprising:
- the OneCompute Platform web API,
- the OneCompute Portal,
- the Job Orchestration Service, and
- various management tasks.

B. The Computational Back-end, comprising:
- an Azure Batch account containing the node pools that run the OneCompute applications, and
- the application packages deployed to these pools.

Figure: OneCompute Platform Architecture

Explaining the OneCompute Platform Architecture

The following paragraphs explain the OneCompute Platform architecture in terms of how an interactive client application would use it.

NOTE: The OneCompute Platform supports not only interactive, user-initiated workflows but also service-initiated workflows that authenticate with client credentials.
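A service-initiated workflow typically obtains its token through the OAuth 2.0 client credentials grant. The sketch below only builds that token request without sending it. The endpoint URL, client ID, secret and scope are hypothetical placeholders, because this document does not specify Veracity's actual token endpoint or the OneCompute API scope.

```python
from urllib.parse import urlencode
from urllib.request import Request

# Hypothetical placeholders: the real Veracity token endpoint and the
# OneCompute API scope are not given in this document.
TOKEN_ENDPOINT = "https://login.example.com/tenant-id/oauth2/v2.0/token"
CLIENT_ID = "my-service-client-id"
CLIENT_SECRET = "my-service-secret"
SCOPE = "https://example.com/onecompute/.default"


def build_client_credentials_request(endpoint, client_id, client_secret, scope):
    """Build (but do not send) an OAuth 2.0 client credentials token request."""
    body = urlencode({
        "grant_type": "client_credentials",  # RFC 6749, section 4.4
        "client_id": client_id,
        "client_secret": client_secret,
        "scope": scope,
    }).encode("ascii")
    return Request(
        endpoint,
        data=body,
        headers={"Content-Type": "application/x-www-form-urlencoded"},
        method="POST",
    )


req = build_client_credentials_request(TOKEN_ENDPOINT, CLIENT_ID, CLIENT_SECRET, SCOPE)
```

In a standard OAuth 2.0 flow, sending this request (for example with `urllib.request.urlopen(req)`) returns a JSON document with an `access_token`, which the service then presents as a bearer token when calling the web API.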

Clients

- Client application [1]. A client application (desktop, mobile, web or script) that submits analysis jobs (data flow #1) through the OneCompute public web API [2]. The jobs are executed by one of the deployed OneCompute Applications [6] running in a dynamically scaled Azure Batch pool [5]. While a job executes, the client may regularly query the detailed status of each task in the job through the OneCompute web API [2]. As each task completes, its result files can be downloaded from Azure Blob Storage [3].

- OneCompute Portal [7]. A web site that allows users to inspect the status and consumption of their jobs, browse their private blob container in Azure Storage [3] and get consumption reports. Users with additional roles have extra capabilities: accountants can create consumption reports, admins can grant users access to applications, operators can manage the pools in the Batch account, and application owners can manage their applications.
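The portal roles listed above can be summarized as a role-to-capability mapping. This is a minimal sketch for illustration only: the role and capability identifiers are hypothetical names, not the portal's actual authorization model.

```python
from enum import Enum


class Capability(Enum):
    VIEW_OWN_JOBS = "view own jobs and consumption"
    BROWSE_OWN_CONTAINER = "browse own blob container"
    CREATE_CONSUMPTION_REPORTS = "create consumption reports"
    GRANT_APPLICATION_ACCESS = "grant users access to applications"
    MANAGE_POOLS = "manage Batch pools"
    MANAGE_APPLICATIONS = "manage own applications"


# Every signed-in user gets these; roles only add capabilities on top.
BASE = {Capability.VIEW_OWN_JOBS, Capability.BROWSE_OWN_CONTAINER}

# Hypothetical role names mirroring the roles described in the text.
ROLE_CAPABILITIES = {
    "user": set(),
    "accountant": {Capability.CREATE_CONSUMPTION_REPORTS},
    "admin": {Capability.GRANT_APPLICATION_ACCESS},
    "operator": {Capability.MANAGE_POOLS},
    "application_owner": {Capability.MANAGE_APPLICATIONS},
}


def capabilities(roles):
    """Return the union of the base capabilities and those of each role."""
    result = set(BASE)
    for role in roles:
        result |= ROLE_CAPABILITIES.get(role, set())  # unknown roles add nothing
    return result


def can(roles, capability):
    """Check whether a user holding the given roles has a capability."""
    return capability in capabilities(roles)
```

Modelling roles as additive sets means a user can hold several roles at once (for example, both accountant and admin) without a separate combined role.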

Front-end Services

The functional blocks in the figure are numbered, and this document refers to them by those numbers in brackets:

- OneCompute public web API [2]. A service that provides a REST API for job scheduling, job status and progress, and URIs with access tokens for accessing files in Azure Blob Storage [3]. Jobs submitted by the client application through the OneCompute API are put on an Azure Storage queue [3].

- Job Scheduler and Orchestrator [4]. A service that takes jobs from the job queue [3] and schedules an Azure Batch job for the Sesam Wind application [6] deployed to the Azure Batch account [5] in the Computational Back-end [B].

- OneCompute Portal web application [8]. A web application that hosts the OneCompute Portal [7].

- Management Services [9]. A set of scheduled or message-driven services that perform various long-running management tasks, such as storage consumption logging, accounting and storage cleanup.

- Telemetry [10]. Each front-end service is configured to emit telemetry to Application Insights.
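The hand-off from the web API [2] to the Job Scheduler and Orchestrator through the Azure Storage queue [3] is a queue-based decoupling pattern: the API returns as soon as the job message is enqueued, and the orchestrator processes messages at its own pace. The sketch below models this in memory with Python's `queue.Queue`. The message fields and `schedule_batch_job` are hypothetical stand-ins for the real Azure Storage Queue and Azure Batch calls.

```python
import json
import queue
import uuid

# In-memory stand-in for the Azure Storage jobs queue.
jobs_queue = queue.Queue()

# Records the job IDs the (hypothetical) Batch scheduler has received.
scheduled = []


def submit_job(application, input_files):
    """Web API side: enqueue a job submission message and return immediately."""
    job_id = str(uuid.uuid4())
    message = {"jobId": job_id, "application": application, "inputs": input_files}
    jobs_queue.put(json.dumps(message))  # queue messages carry text payloads
    return job_id


def schedule_batch_job(job):
    """Hypothetical stand-in for creating an Azure Batch job and its tasks."""
    scheduled.append(job["jobId"])


def orchestrator_poll_once():
    """Orchestrator side: take one message, if any, and schedule it."""
    try:
        raw = jobs_queue.get_nowait()
    except queue.Empty:
        return False
    schedule_batch_job(json.loads(raw))
    jobs_queue.task_done()  # loosely mirrors deleting the processed message
    return True
```

For example, `submit_job("SesamWind", ["model.inp"])` followed by `orchestrator_poll_once()` moves one job from the queue to the scheduler. With a real Azure Storage queue, a retrieved message stays invisible for a visibility timeout and must be deleted explicitly after successful processing; if the orchestrator crashes first, the message reappears and the job is not lost.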

Computational Back-end

- Azure Batch account [5]. The Batch account contains pools and application packages. Auto-scaling is used to provision the compute capacity required to execute the jobs. All configuration of pools and applications can be done from the OneCompute Portal [7].

- OneCompute Applications [6]. These are executable programs that execute the computational workflow prescribed by the client applications [1]. They are file-based console applications that run in batch mode. Input files are read from the user's private blob container in Azure Storage [3], and result files are transferred back to Azure Blob Storage upon task completion.
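The per-task lifecycle of an application worker (download inputs, run the analysis, upload results, record the final status) can be sketched as follows. Dictionaries stand in for the user's blob container and the task status table, `run_analysis` is a hypothetical placeholder for the Sesam analysis engines, and the intermediate `running` status is an assumption; only the completed and faulted states come from the data flows described below.

```python
def run_task(task_id, input_names, blob_container, status_table, run_analysis):
    """Run one Batch task end to end and return its final status.

    blob_container: dict of blob name to bytes (stand-in for the user's container)
    status_table:   dict of task id to status record (stand-in for the status table)
    run_analysis:   callable mapping {input name: bytes} to {output name: bytes};
                    a hypothetical placeholder for the Sesam analysis engines
    """
    status_table[task_id] = {"status": "running", "progress": 0.0}
    try:
        # 1. Download the input files from the user's blob container.
        inputs = {name: blob_container[name] for name in input_names}
        # 2. Run the analysis on the downloaded inputs.
        outputs = run_analysis(inputs)
        status = "completed"
    except Exception as exc:
        # Any failure faults the task; keep an error log as its output.
        outputs = {"error.log": repr(exc).encode()}
        status = "faulted"
    # 3. Upload output files in both the success and the failure case.
    for name, data in outputs.items():
        blob_container[f"results/{task_id}/{name}"] = data
    # 4. Record the final status; progress reaches 1.0 only on success.
    progress = 1.0 if status == "completed" else status_table[task_id]["progress"]
    status_table[task_id] = {"status": status, "progress": progress}
    return status
```

Uploading outputs even when a task faults matches the behaviour described in the data flows and gives the user something to diagnose; the `results/<task id>/` blob prefix is an illustrative choice.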

Data Flows

Data Flows initiated by the client application

- Using the client application [1], the user authenticates (1) with Veracity [0], the DNV Azure AD tenant.

- Using the client application [1], the user submits a OneCompute job (2) to OneCompute.

- The OneCompute web API [2] posts a "job submission" message (3) on the jobs queue in Azure Storage [3].

- The OneCompute Job Orchestrator picks up a job (4) from the jobs queue and schedules a corresponding job (5) with Azure Batch [5].

- As the application worker of the OneCompute Application [6] executes a particular Batch task in the job, it downloads input files (4) from the user's container in Azure blob storage and invokes the Sesam analysis engines appropriate for the task. It also updates task status and progress (4) in dedicated Azure storage tables [3].

- Upon successful completion of a task, the Sesam Wind Application worker uploads output files (4) to Azure Blob Storage and sets the task status to completed (4). Upon task failure, the task status is set to faulted (4) and output files are uploaded (4) to Azure Blob Storage.

- While a job executes, the Client Application regularly queries task status and progress (1). The API in turn reads task status and progress (3) from the task status table in Azure Table Storage [3].

- Upon task completion, the Client Application requests access to blob storage (1) and downloads output files from blob storage [3].
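On the client side, these flows amount to a loop: submit a job, poll task status, and download each task's outputs once it completes. The sketch assumes a hypothetical `api` object with `submit_job`, `get_task_statuses` and `download_outputs` methods; these are not the actual OneCompute client API. `FakeApi` is an in-memory stand-in included only so the loop can be exercised.

```python
import time

# Final task states, following the completed/faulted states described above.
FINAL_STATES = {"completed", "faulted"}


def run_job(api, job_spec, poll_interval=5.0, timeout=3600.0):
    """Submit a job, poll until every task is final, download outputs as tasks complete."""
    job_id = api.submit_job(job_spec)
    deadline = time.monotonic() + timeout
    results = {}
    while True:
        statuses = api.get_task_statuses(job_id)  # {task id: status string}
        # Download each task's outputs as soon as that task has completed.
        for task_id, status in statuses.items():
            if status == "completed" and task_id not in results:
                results[task_id] = api.download_outputs(job_id, task_id)
        if statuses and all(s in FINAL_STATES for s in statuses.values()):
            return job_id, statuses, results
        if time.monotonic() > deadline:
            raise TimeoutError(f"job {job_id} not finished after {timeout} s")
        time.sleep(poll_interval)


class FakeApi:
    """In-memory stand-in: both tasks reach a final state on the third poll."""

    def __init__(self):
        self.polls = 0

    def submit_job(self, job_spec):
        return "job-1"

    def get_task_statuses(self, job_id):
        self.polls += 1
        if self.polls < 3:
            return {"t1": "running", "t2": "running"}
        return {"t1": "completed", "t2": "faulted"}

    def download_outputs(self, job_id, task_id):
        return {f"{task_id}.out": b"result"}
```

Calling `run_job(FakeApi(), {"application": "demo"}, poll_interval=0)` polls three times and returns outputs only for the completed task `t1`. A fixed polling interval keeps the sketch simple; a real client might back off between polls to reduce load on the web API.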

Data Flows initiated by the OneCompute Portal

- When opening the OneCompute Portal, the portal redirects (8) to Veracity [0], the DNV Azure AD tenant, for authentication. The user authenticates with Veracity [0].

- The user views job details or browses files (2) in their private blob container in Azure Storage [3].

- The OneCompute Portal queries Azure table storage for job details and blob storage for user files and renders their content (2).

- Operators and application managers may use the OneCompute Portal to manage Batch pools and application packages (11) in the Azure Batch account [5].