Overview of OneCompute Libraries

- Introduction

- What is OneCompute Libraries?

- OneCompute Architectural Overview

- Supported Back End Platforms

Introduction

The main value provided by DNV software products stems from their advanced analysis capabilities. Bringing these capabilities into a "cloud-first" world should be a top priority for our ecosystem projects. This will not only protect our main IP but also dramatically increase its value, because users will be able to achieve more, with better results, faster and with less effort.

Moving an analysis workflow to the cloud requires an end-to-end solution with tight data security, access control to the computational services, data management and resource management. OneCompute Libraries does not purport to be such an end-to-end solution, which leaves room for it to be applied in different environments. It does, however, provide key building blocks for creating such solutions within each ecosystem.

What is OneCompute Libraries?

OneCompute Libraries is a set of components and services for:

- Modelling a computational workflow, known as a job.

- Scheduling the job for execution. The job may consist of sequential and parallel components that need to be orchestrated to execute in a particular order.

- Orchestrating the job during execution, ensuring that tasks are processed in the correct order.

- Monitoring the execution of jobs, including their status and progress.

- Building back end compute services to execute the work defined by a job. Back end components are optional: it is possible to build a completely custom back end solution without OneCompute while still using OneCompute for modelling, scheduling and monitoring computational workflows.
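To make "a job made of sequential and parallel components" concrete, the sketch below models a job as a set of work units with dependencies and groups them into stages: every unit in a stage depends only on units in earlier stages, so units within one stage could run in parallel while the stages themselves run in order. It is written in Python for illustration only; `WorkUnit`, `Job` and `execution_stages` are hypothetical names, not the OneCompute API.

```python
from dataclasses import dataclass, field


@dataclass
class WorkUnit:
    # Hypothetical stand-in for a work unit: an id, the ids it depends on,
    # and opaque application-specific input data.
    id: str
    depends_on: list[str] = field(default_factory=list)
    data: dict = field(default_factory=dict)


@dataclass
class Job:
    # A job is modelled here simply as a collection of work units.
    units: list[WorkUnit]


def execution_stages(job: Job) -> list[list[str]]:
    """Group work unit ids into stages (Kahn's algorithm, level by level).

    Units in the same stage do not depend on each other, so they may run
    in parallel; the stages must run in order.
    """
    remaining = {u.id: set(u.depends_on) for u in job.units}
    stages: list[list[str]] = []
    done: set[str] = set()
    while remaining:
        # A unit is ready when all of its dependencies are already done.
        ready = sorted(uid for uid, deps in remaining.items() if deps <= done)
        if not ready:
            raise ValueError("job contains a dependency cycle or an unknown dependency")
        stages.append(ready)
        done.update(ready)
        for uid in ready:
            del remaining[uid]
    return stages


# A small fan-out/fan-in workflow: prepare -> (case-a, case-b) -> summarize
job = Job([
    WorkUnit("prepare"),
    WorkUnit("case-a", depends_on=["prepare"]),
    WorkUnit("case-b", depends_on=["prepare"]),
    WorkUnit("summarize", depends_on=["case-a", "case-b"]),
])
print(execution_stages(job))  # [['prepare'], ['case-a', 'case-b'], ['summarize']]
```

The two middle units share a stage because neither depends on the other; this is the kind of parallelism a scheduler and orchestrator can exploit.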

One key feature of OneCompute is the removal of back-end dependencies from the application code. This allows applications to be deployed to, and run in, different back-end environments. OneCompute provides an abstraction over the back-end computational resources where the work is actually executed. This provides the flexibility to run the computations on an individual device, on on-premises servers or with third-party cloud providers, such as Microsoft Azure or Amazon AWS.
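The general idea of keeping back-end dependencies out of application code can be sketched as follows: the application depends only on a small interface, and where the work runs is decided by which implementation is passed in. This is an illustrative Python sketch; `Backend`, `LocalBackend` and `ThreadPoolBackend` are hypothetical names, and a local thread pool merely stands in for a real platform such as Azure Batch.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Iterable, Protocol


class Backend(Protocol):
    # Hypothetical back-end abstraction: run a function over a batch of inputs.
    def run(self, fn: Callable[[int], int], inputs: Iterable[int]) -> list[int]: ...


class LocalBackend:
    """Runs everything sequentially in the current process (e.g. a single device)."""

    def run(self, fn, inputs):
        return [fn(x) for x in inputs]


class ThreadPoolBackend:
    """Runs inputs concurrently on a local thread pool, standing in for a remote pool."""

    def __init__(self, max_workers: int = 4):
        self.max_workers = max_workers

    def run(self, fn, inputs):
        with ThreadPoolExecutor(max_workers=self.max_workers) as pool:
            # map() preserves input order, so results line up with inputs.
            return list(pool.map(fn, inputs))


def application_code(backend: Backend) -> list[int]:
    # The application talks only to the abstraction, never to a concrete platform.
    def square(x: int) -> int:
        return x * x

    return backend.run(square, range(5))


print(application_code(LocalBackend()))       # [0, 1, 4, 9, 16]
print(application_code(ThreadPoolBackend()))  # [0, 1, 4, 9, 16]
```

Switching back ends changes only the object passed in; `application_code` itself is untouched, which is the property the paragraph above describes.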

OneCompute Architectural Overview

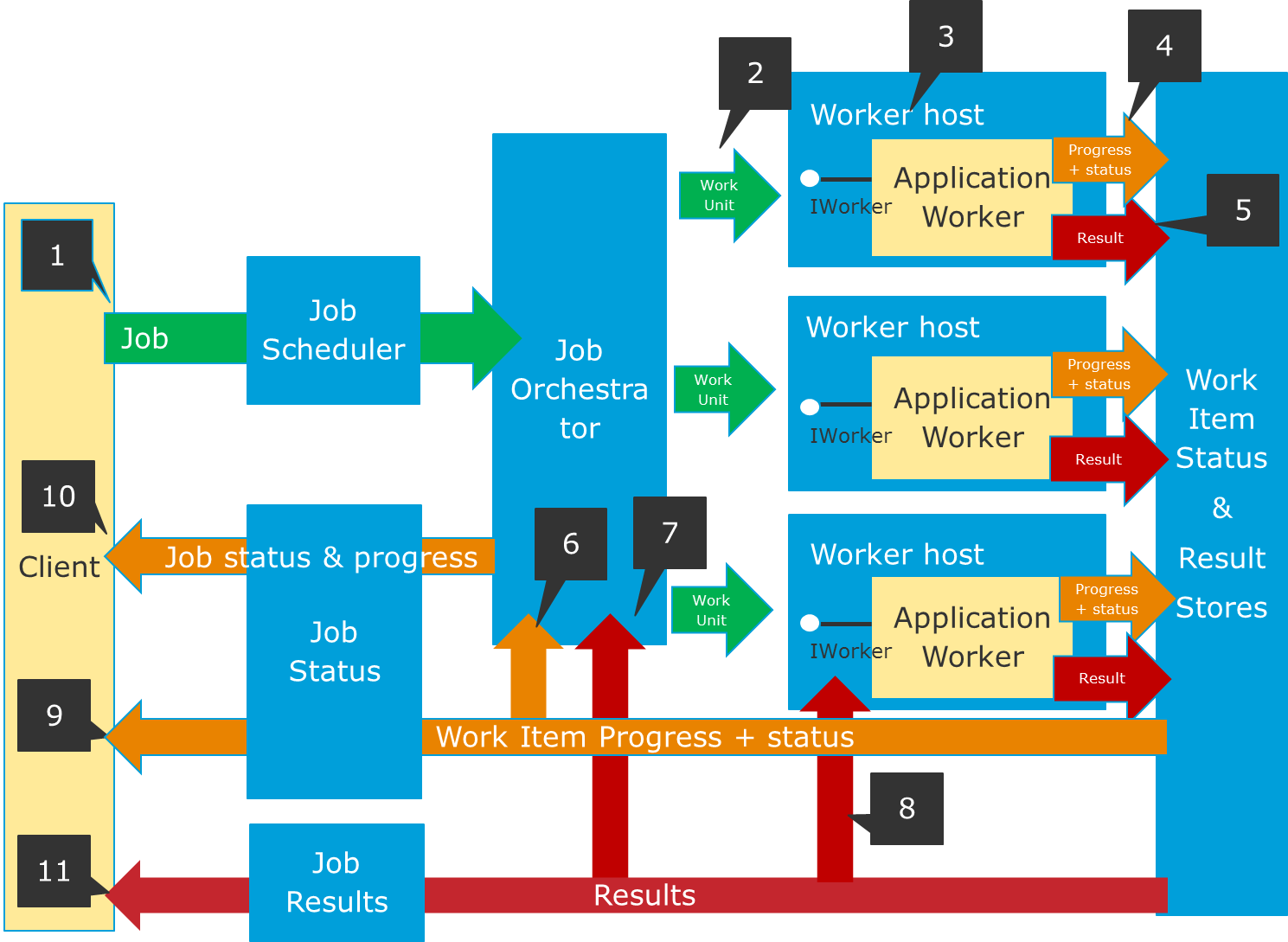

Figure 1 depicts OneCompute and the data flow resulting from a client application submitting a compute job to a OneCompute-based compute service. OneCompute components are marked in blue and application components are marked in yellow. The arrows represent the data flow. Each of these data flows has a separate data contract in the system. The labels in the figure refer to steps in the process that are explained in Table 3 below.

Figure 1: OneCompute Architectural Overview

Data Contracts

| Data contract | Description | Used by | Figure 1 Labels |
|---|---|---|---|
| Job | A job consists of a set of Work Items representing the computational workflow (see the Concepts page for more details). | Client, Job Scheduler | 1 |
| Work Unit | A Work Unit is the Work Item that represents computational work at the most granular level. A Work Unit wraps application-specific input data that is unwrapped and processed by the Application Worker. | Client, Job Orchestrator, Worker Host, Application Worker | 2 |
| Work Item Progress & Status | During execution of a particular Work Unit, the Application Worker produces progress and status events. The WorkerHost stores this information at regular intervals in the Work Item Status Store. The Client can request progress and status for one or more WorkUnits from the Job Status Service. | Application Worker, Worker Host, Job Orchestrator, Work Item Status Store, Job Status Service, Client | 4, 6, 8, 11 |
| Job Progress & Status | During execution of a particular Job, the Job Orchestrator aggregates progress and status for each WorkUnit in the job and stores the aggregate in the Job Status Store. The Client can request progress and status for the job from the Job Status Service. | Client, Job Orchestrator, Job Status Service, Job Status Store | 10 |
| Result | The Result is a "sibling" of the Work Unit. It wraps application-specific output data from the Worker. When the Worker completes processing a Work Unit, it returns a Result. | Application Worker, Result Store, Job Orchestrator, Job Results Service, Client | 5, 7, 9 |

Table 1: OneCompute Data Contracts
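Because these contracts cross process and network boundaries, each one needs a serializable shape. The sketch below shows one possible shape for the Work Unit, Result and work item status contracts as Python dataclasses with a JSON round trip. The field names, the `WorkStatus` values and the JSON wire format are assumptions made for illustration; they are not the actual OneCompute contract definitions.

```python
import json
from dataclasses import asdict, dataclass, field
from enum import Enum


class WorkStatus(str, Enum):
    # Hypothetical status values; the real contract may define a different set.
    PENDING = "Pending"
    EXECUTING = "Executing"
    COMPLETED = "Completed"
    FAULTED = "Faulted"


@dataclass
class WorkUnit:
    id: str
    depends_on: list[str] = field(default_factory=list)
    payload: dict = field(default_factory=dict)  # application-specific input, opaque to the framework


@dataclass
class Result:
    work_unit_id: str  # the "sibling" Work Unit; also the lookup key in the Result Store
    payload: dict = field(default_factory=dict)  # application-specific output


@dataclass
class WorkItemStatus:
    work_item_id: str
    status: WorkStatus
    progress: float  # fraction complete, 0.0 to 1.0


def to_json(contract) -> str:
    # Serialize any of the dataclass contracts above to a JSON string.
    return json.dumps(asdict(contract))


unit = WorkUnit("case-a", depends_on=["prepare"], payload={"wave_height_m": 3.5})
restored = WorkUnit(**json.loads(to_json(unit)))
print(restored == unit)  # True

status = WorkItemStatus("case-a", WorkStatus.EXECUTING, 0.4)
print(to_json(status))  # {"work_item_id": "case-a", "status": "Executing", "progress": 0.4}
```

Keeping the application data in an opaque `payload` reflects the idea that the framework only wraps and transports it, while the Application Worker is the component that interprets it.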

Services and Service Contracts

| Service | Description | Service contract implemented |
|---|---|---|
| Job Scheduler | The Job Scheduler service takes a job submitted by the client application and delegates its execution to a dedicated Job Orchestrator. | OneCompute Job Scheduler contract |
| Job Orchestrator | The Job Orchestrator (created by the Job Scheduler) splits the job into individual Work Units and orchestrates the execution of the job for its entire lifetime. | OneCompute Job Orchestrator contract |
| WorkerHost | The WorkerHost receives a WorkUnit to be executed. It is the module that the Job Orchestrator invokes, through the back end platform services, to run the Application Worker. | Back-end platform dependent contracts + OneCompute WorkerHost contract |
| Application Worker | The Application Worker is an application component that receives a WorkUnit to execute, together with any WorkUnits it depends on and their Results. It executes the WorkUnit and returns a Result object. The Application Worker can be made completely independent of the back end platform. | OneCompute Worker contract |
| Work Item Status Store | Stores progress and status for any given work item. | OneCompute Work Item Status Entity contract |
| Result Store | Stores Result objects for any given work item. | OneCompute Work Item Result Entity contract |
| Job Status Service | Query service for job and work item status and progress. | OneCompute Job and work item status service contracts |
| Work Item Result Service | Query service for work item results. | OneCompute Job work item result service contracts |

Table 2: OneCompute Services and Service Contracts
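The split between a back-end-independent Application Worker and a back-end-specific WorkerHost can be sketched as below. `Worker` is a hypothetical Python analogue of the IWorker contract, and `WorkerHost`, `SumWorker` and the in-memory dictionaries standing in for the Work Item Status Store and Result Store are illustrative assumptions, not the real OneCompute types or storage services.

```python
from abc import ABC, abstractmethod
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class WorkUnit:
    id: str
    depends_on: list[str] = field(default_factory=list)
    payload: dict = field(default_factory=dict)


@dataclass
class Result:
    work_unit_id: str
    payload: dict


ProgressCallback = Callable[[float, str], None]


class Worker(ABC):
    """Hypothetical analogue of the IWorker contract: no back-end dependencies."""

    @abstractmethod
    def execute(self, unit: WorkUnit, dependency_results: list[Result],
                report: ProgressCallback) -> Result: ...


class SumWorker(Worker):
    # Toy application code: adds its own input to the totals of its dependencies.
    def execute(self, unit, dependency_results, report):
        report(0.0, "Executing")
        total = unit.payload["value"] + sum(r.payload["total"] for r in dependency_results)
        report(1.0, "Completed")
        return Result(unit.id, {"total": total})


class WorkerHost:
    """Back-end-specific glue: fetches inputs, runs the worker, stores status and results."""

    def __init__(self, worker: Worker):
        self.worker = worker
        self.status_store: dict[str, tuple[float, str]] = {}  # latest record only
        self.result_store: dict[str, Result] = {}             # keyed by Work Unit id

    def run(self, unit: WorkUnit) -> None:
        # Fetch the Results of the unit's dependencies before invoking the worker.
        deps = [self.result_store[d] for d in unit.depends_on]

        def report(progress: float, status: str) -> None:
            # Each report overwrites the previous one; no history is kept.
            self.status_store[unit.id] = (progress, status)

        self.result_store[unit.id] = self.worker.execute(unit, deps, report)


host = WorkerHost(SumWorker())
host.run(WorkUnit("a", payload={"value": 2}))
host.run(WorkUnit("b", payload={"value": 3}))
host.run(WorkUnit("c", depends_on=["a", "b"], payload={"value": 10}))
print(host.result_store["c"].payload)  # {'total': 15}
print(host.status_store["c"])          # (1.0, 'Completed')
```

Only `WorkerHost` would need replacing to target a different back end; `SumWorker` never sees where its inputs come from or where its Result is stored.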

Execution and Data Flows

The sequence depicted in Figure 1 above can be described as follows:

| Label | Step | Explanation |
|---|---|---|
| 1 | The Client submits a Job to the OneCompute Job Scheduler service. | A Job is a composition of Work Units, and their relationships determine the workflow of the job. The OneCompute Job Scheduler creates a Job Orchestrator for the job and delegates job orchestration to it. |
| 2 | The Job Orchestrator splits the job into individual Work Units and orchestrates the execution of the job. | Job orchestration means ensuring that Work Units are processed by the back end in the correct order and aggregating status and progress information for the entire job. How the orchestration is carried out depends on the back end platform. However, the orchestrator must ensure that the back end executes Work Units in the correct order and as efficiently as possible, for instance by ensuring that any work that can be done in parallel is executed in parallel. |
| 3 | The WorkerHost receives a WorkUnit to be executed. | The WorkerHost is the module that the orchestrator invokes, by using the back end platform services, to run the application code on the back end. The WorkerHost depends on the back end platform: in Azure Batch, for instance, the WorkerHost is an executable that is scheduled to run for each Work Unit, while in Service Fabric it would be a service. The WorkerHost receives the WorkUnit to be processed, creates an Application Worker and lets the Application Worker process the WorkUnit. The Application Worker is an application component implementing the IWorker contract. This isolates the Application Worker from the back end platform, so the same Application Worker can run on different back end platforms. Consumers of OneCompute are responsible for creating a worker implementation that satisfies the IWorker contract. The OneCompute library currently provides WorkerHost implementations for several back end platforms (Windows Console, Azure Batch and REST end points). The library is open and extensible, and other back end platforms can be added as needed, either by OneCompute or by third parties. |
| 4 | Progress and status events are posted by the Application Worker while processing the Work Unit and received by the WorkerHost. | The WorkerHost posts progress and status at regular intervals to the Work Item Progress and Status Store, currently using Azure Storage platform services or SQLite. Note that only the last status or progress record posted for each Work Item is retained: the last record received overwrites any previous record, and records do not accumulate like messages in a log. |
| 5 | Upon finishing processing the Work Unit, the Application Worker creates a Result object and wraps application-specific result information into it. | The Result object is returned to the WorkerHost, which posts it to the Result Store. The lookup key for the result is the Work Unit id, which enables later lookup of the result for a particular Work Unit. |
| 6 | The Job Orchestrator monitors execution of all Work Units in the job. | While doing so, it aggregates progress and status for the job and stores the aggregated job status and progress in the Job Status Store. Note that only the last status or progress record posted for each job is retained: the last record received overwrites any previous record, and records do not accumulate like messages in a log. |
| 7 | The WorkerHost first retrieves the Results of the WorkUnit's dependencies from the Result Store. | The Application Worker receives both the WorkUnit and the results from execution of its dependencies. |
| 8 | The Client requests status and progress for one or more WorkUnits of a particular job from the Job Status service. | |
| 9 | The Client requests status and progress for a particular job from the Job Status service. | |
| 10 | The Client requests Results for one or more WorkUnits of a particular job from the Job Results service. | |

Table 3: Job scheduling and execution sequence
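The orchestration part of this sequence (steps 2, 5, 6 and 7) can be sketched as a loop that dispatches every unit whose dependencies have results, passes those results along, stores each result under its Work Unit id and overwrites a single aggregated progress record for the job. This is a simplified, single-process Python sketch: `run_job` and `toy_worker` are hypothetical, a thread pool stands in for the back end, and the dictionaries stand in for the Result Store and Job Status Store. How a real OneCompute orchestrator does this depends on the back end platform.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Any, Callable


def run_job(job_id: str,
            units: dict[str, list[str]],
            execute: Callable[[str, list[Any]], Any],
            max_workers: int = 4) -> tuple[dict[str, Any], dict[str, float]]:
    """Hypothetical orchestrator loop that runs a job stage by stage.

    `units` maps each work unit id to the ids it depends on; `execute(uid, dep_results)`
    stands in for dispatching one unit to a WorkerHost on some back end.
    """
    result_store: dict[str, Any] = {}        # keyed by work unit id
    job_status_store: dict[str, float] = {}  # one record per job; each write overwrites the last
    pending = dict(units)
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        while pending:
            # A unit is ready once the results of all its dependencies are stored.
            ready = [u for u, deps in pending.items() if all(d in result_store for d in deps)]
            if not ready:
                raise ValueError("job contains a dependency cycle or an unknown dependency")
            # Independent units are submitted together, so they can run in parallel.
            futures = {u: pool.submit(execute, u, [result_store[d] for d in pending[u]])
                       for u in ready}
            for u, fut in futures.items():
                result_store[u] = fut.result()
                del pending[u]
            # Aggregate progress for the whole job, replacing the previous record.
            job_status_store[job_id] = len(result_store) / len(units)
    return result_store, job_status_store


def toy_worker(uid: str, dep_results: list[int]) -> int:
    # Toy application worker: 1 for itself plus the sum of its dependencies' results.
    return 1 + sum(dep_results)


results, status = run_job("job-1", {
    "prepare": [],
    "case-a": ["prepare"],
    "case-b": ["prepare"],
    "summarize": ["case-a", "case-b"],
}, toy_worker)
print(results)  # {'prepare': 1, 'case-a': 2, 'case-b': 2, 'summarize': 5}
print(status)   # {'job-1': 1.0}
```

Waiting for a whole stage before dispatching the next keeps the sketch short; an orchestrator could instead dispatch each unit as soon as its own dependencies complete, which uses the back end more efficiently.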

Back End Platforms

See the Back End Platforms section for a description of the back end platforms that OneCompute currently supports.